- Measurement of the extent to which data collectors (raters) assign the same score to the same variable is called interrater reliability (extent of agreement among data collectors)

- Interrater reliability is a concern to one degree or another in most large studies due to the fact that multiple people collecting data may experience and interpret the phenomena of interest differently

- While there have been a variety of methods to measure interrater reliability, traditionally it was measured as percent agreement, calculated as the number of agreement scores divided by the total number of scores; but it will not account for chance agreement. i.e. it does not take account of the possibility that raters guessed on scores. Cohen’s kappa was developed to account for this concern

- Cohen’s kappa, symbolized by the lower case Greek letter, κ is a robust statistic useful for either interrater or intrarater reliability testing.

- Similar to correlation coefficients, it can range from −1 to +1, where 0 represents the amount of agreement that can be expected from random chance, and 1 represents perfect agreement between the raters. While kappa values below 0 are possible, they are unlikely in practice

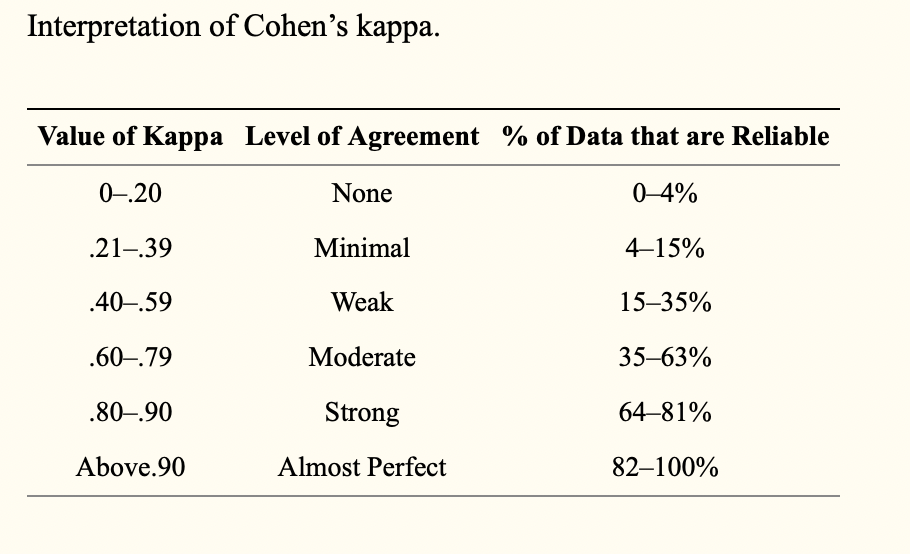

- Cohen suggested the Kappa result be interpreted as follows: values ≤ 0 as indicating no agreement and 0.01–0.20 as none to slight, 0.21–0.40 as fair, 0.41– 0.60 as moderate, 0.61–0.80 as substantial, and 0.81–1.00 as almost perfect agreement. But there are many arguments against this classification and a modified classification has been recommended

- Any kappa below 0.60 indicates inadequate agreement among the raters and little confidence should be placed in the study

- Kappa may lower the estimate of agreement excessively. Also it cannot be directly interpreted, and thus it has become common for researchers to accept low kappa values in their interrater reliability studies.

- Low levels of interrater reliability are not acceptable in health care or in clinical research, especially when results of studies may change clinical practice in a way that leads to poorer patient outcomes. The best advice for researchers is to calculate both percent agreement and kappa. If there is likely to be much guessing among the raters, it may make sense to use the kappa statistic, but if raters are well trained and little guessing is likely to exist, the researcher may safely rely on percent agreement to determine interrater reliability.